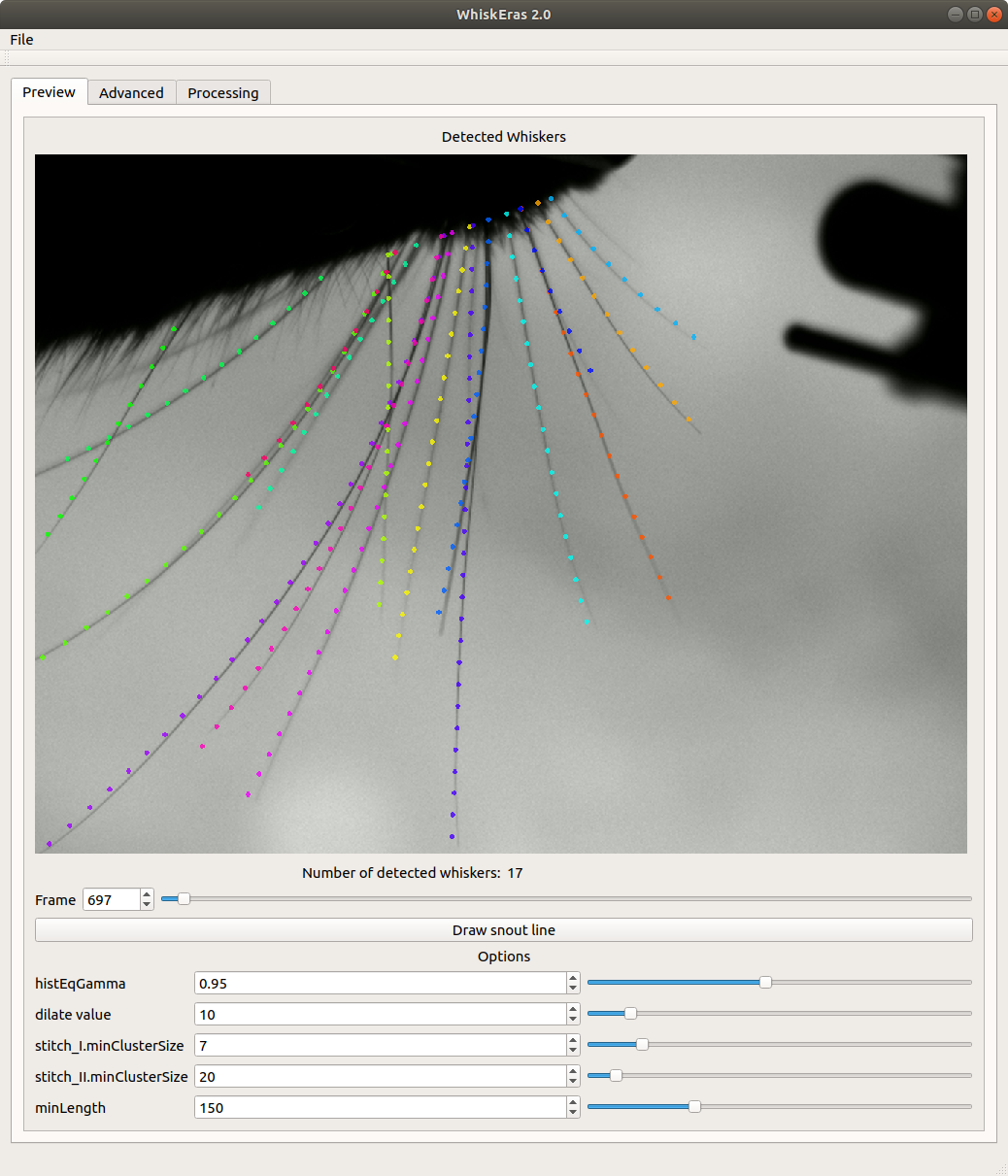

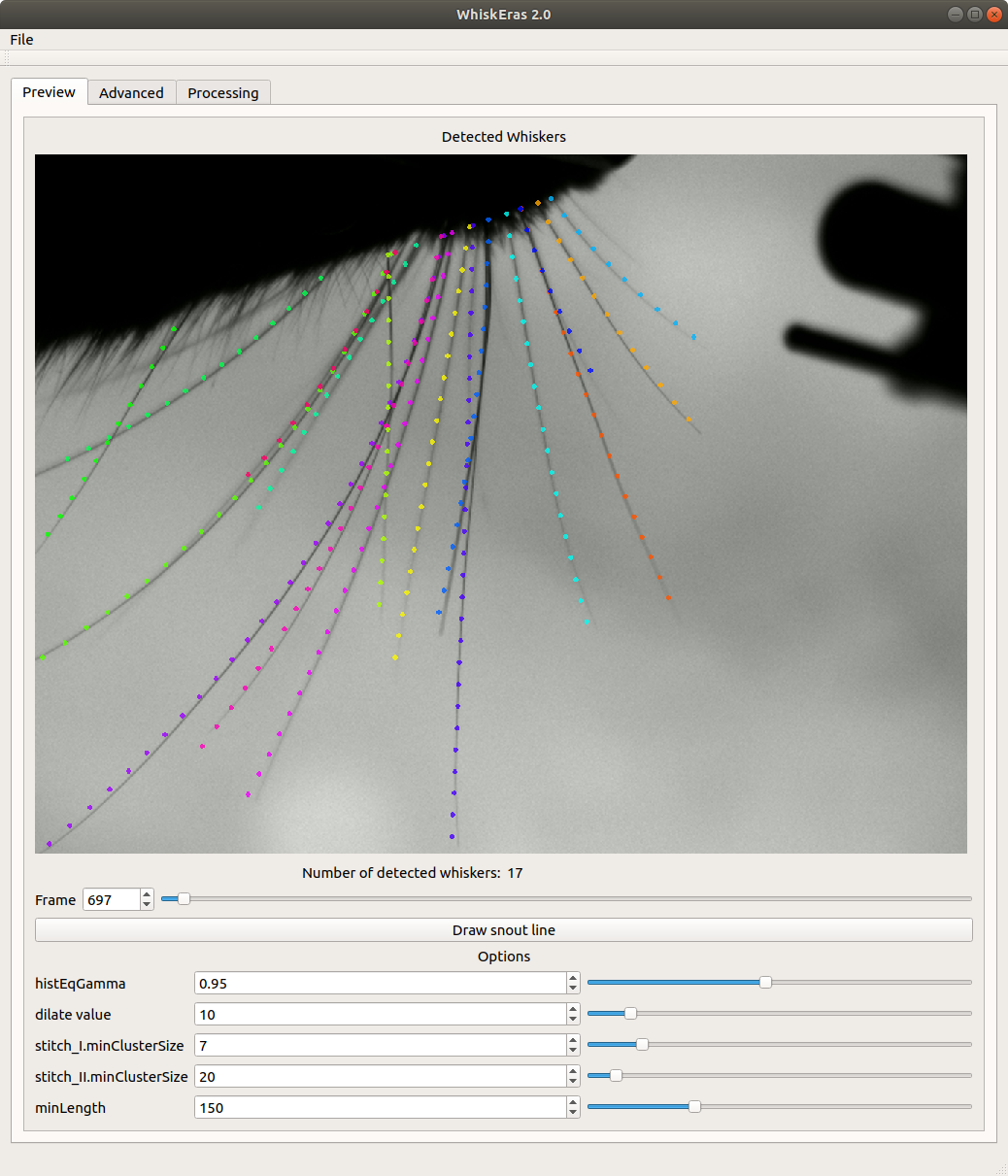

WhiskEras 2.0

Whisker Tracking on Head-Fixed Rodents

The rodent whisker system is a prominent experimental model in neuroscience for studying sensorimotor integration and active sensing. Quantifying whisker movement precisely requires tracking software that is accurate, fast, and easy to use.

WhiskEras 2.0 is a whisker-tracking algorithm designed to track whiskers on untrimmed, head-restrained rodents. It contains two modules:

1. A Detection Module that finds whiskers frame by frame in a video recorded during the whisking experiments. This module uses computer-vision algorithms.
2. A Tracking Module that tracks whiskers over time. This module combines a data tracking algorithm with a machine-learning algorithm based on SVMs.

The algorithm can process videos at more than 50 FPS, depending on the video specifications, and requires minimal human effort to produce state-of-the-art results.
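To give a rough idea of how linear SVMs can assign a detected whisker to a known whisker identity, here is a minimal one-vs-rest sketch. It is not the WhiskEras 2.0 implementation: the feature layout, the `LinearSvm` struct, and the `assignWhisker` function are hypothetical names introduced for illustration, and the decision function is written by hand rather than through LibLinear.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical feature vector for one detected whisker,
// e.g. {angle, base position, curvature}. The real features may differ.
using Features = std::vector<double>;

// One linear classifier per whisker identity (one-vs-rest).
struct LinearSvm {
    std::vector<double> weights;
    double bias;
};

// Signed decision value of one classifier: w . x + b.
double decisionValue(const LinearSvm& svm, const Features& x) {
    assert(svm.weights.size() == x.size());
    double score = svm.bias;
    for (std::size_t i = 0; i < x.size(); ++i) {
        score += svm.weights[i] * x[i];
    }
    return score;
}

// Returns the index of the classifier with the highest positive score,
// or -1 when no classifier claims the detection.
int assignWhisker(const std::vector<LinearSvm>& classifiers, const Features& x) {
    int best = -1;
    double bestScore = 0.0;
    for (std::size_t k = 0; k < classifiers.size(); ++k) {
        const double score = decisionValue(classifiers[k], x);
        if (score > bestScore) {
            bestScore = score;
            best = static_cast<int>(k);
        }
    }
    return best;
}
```

Returning -1 leaves room for detections that match no known whisker, for example a spurious detection, instead of forcing every detection onto an identity.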

Getting Started

To get a local copy up and running, follow these simple steps.

Prerequisites

This algorithm is intended for processing videos recorded during experiments with head-restrained rodents, focused on their whiskers.

Using it on videos where the rodent's head moves freely will not give accurate results and may even cause the program to crash. In addition, the background of the video should stay stable for the whole duration of whisker tracking: ideally only the whiskers move, the lighting does not change, and no external objects move through the frame.
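One simple way to screen a recording for background changes before tracking is to compare a whisker-free region of each frame against a reference frame. The sketch below is only an illustration and is not part of WhiskEras 2.0: the `Frame` layout, the function names, and the threshold are assumptions, and the frames are expected to be already cropped to a region that contains no whiskers.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Grayscale pixels of a whisker-free region, one byte per pixel
// (hypothetical layout chosen for this sketch).
using Frame = std::vector<std::uint8_t>;

// Mean absolute per-pixel intensity difference between two frames of equal size.
double meanAbsDiff(const Frame& a, const Frame& b) {
    assert(a.size() == b.size() && !a.empty());
    double sum = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        sum += std::abs(static_cast<int>(a[i]) - static_cast<int>(b[i]));
    }
    return sum / static_cast<double>(a.size());
}

// True when the region changed by less than `threshold` intensity levels on average.
// The threshold is an assumption and has to be tuned per recording setup.
bool backgroundIsStable(const Frame& reference, const Frame& current, double threshold) {
    return meanAbsDiff(reference, current) < threshold;
}
```

Sensor noise changes a few pixels by small amounts, so the mean difference stays low, whereas a lighting change or an object entering the region shifts many pixels at once and pushes it above the threshold.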

Pre-Installation

Install the following: CUDA, OpenCV with CUDA support, OpenBLAS (or LAPACK), and Qt5.

The project has so far only been tested on CUDA-capable Ubuntu systems. To run WhiskEras 2.0 you will therefore need a Linux system and, if you are going to use a GPU at all, a CUDA-capable GPU.

Installation

There are two ways to run WhiskEras 2.0

You can either run the pre-built binary executable directly or build the project yourself. The executable is the quickest option, but it will probably not run if a required package is missing from your system. In that case, it is better to build the project, read the errors, and resolve them one by one.

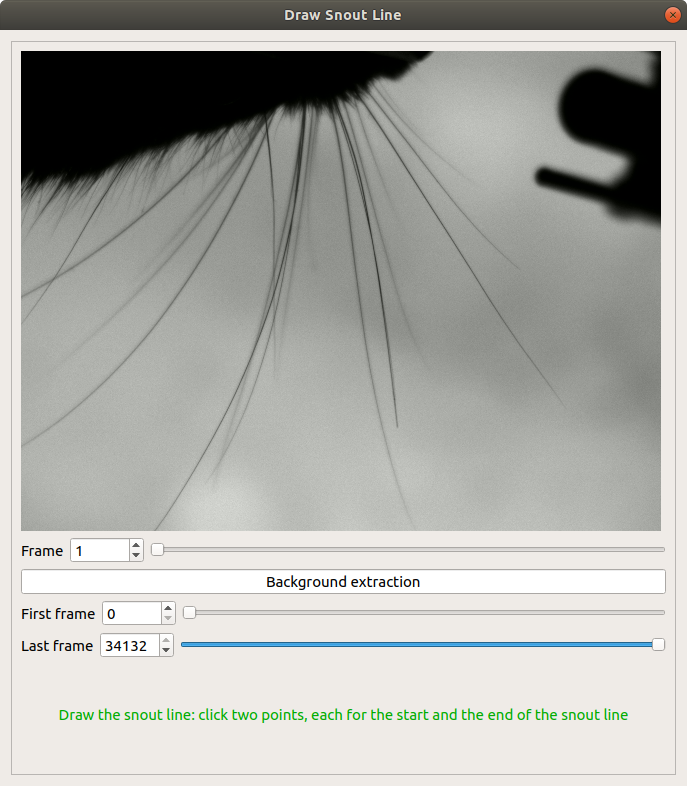

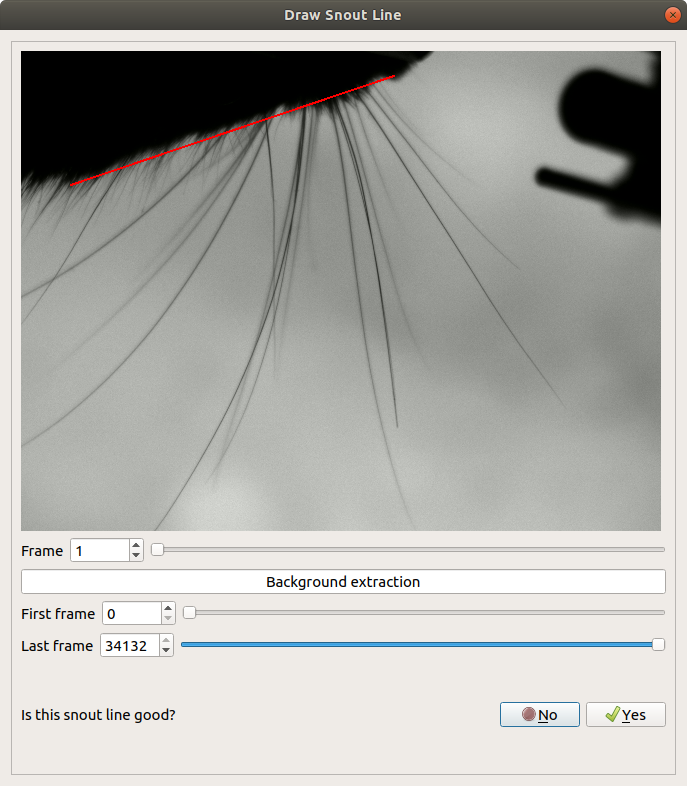

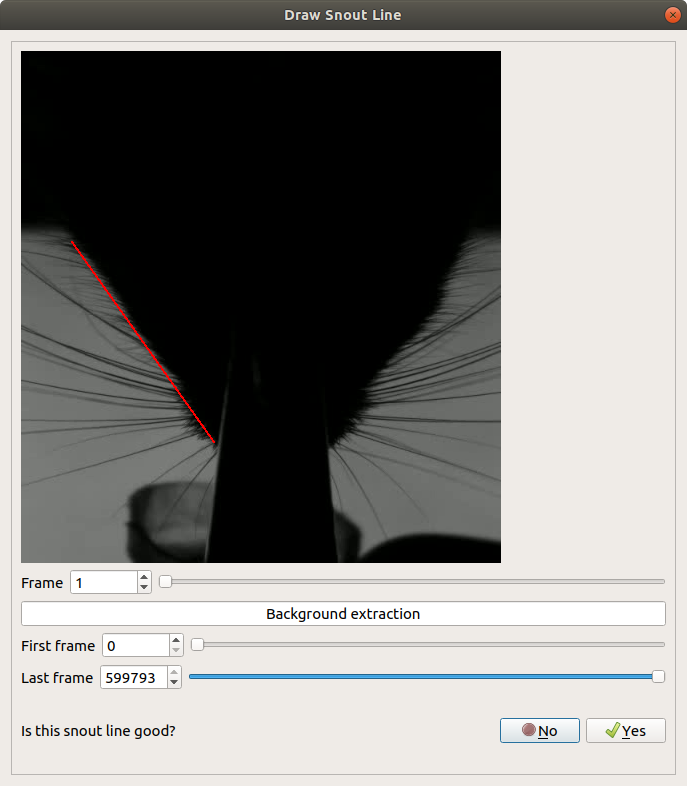

Usage

WhiskEras 2.0 is simple to use; the Usage section walks through it step by step.

Distributed under the GPL license

See the LICENSE section for more information.

This project was developed by the Neuro Computing Lab (NCL) of the Neuroscience Department, Erasmus MC Rotterdam.

It is an accelerated C++ implementation of WhiskEras 2.0, an improved version of the original WhiskEras.

BUILT WITH:

CUDA

Parallel computing platform and application programming interface that allows software to use the capabilities of NVIDIA GPUs.

OpenCV

Open Source Computer Vision Library.

OpenCV extra modules (CUDA)

OpenCV extra modules, including CUDA support.

Eigen

Eigen is a C++ template library for linear algebra: matrices, vectors, numerical solvers, and related algorithms.

LibLinear

An open source library for large-scale linear classification.

Qt

Widget toolkit for creating graphical user interfaces as well as cross-platform applications that run on various software and hardware platforms.